TL;DR:

- We introduce a new ability for AG2 agents, Graph RAG with FalkorDB, providing the power of knowledge graphs

- Structured outputs, available with OpenAI models, make agent responses adhere strictly to your data models, improving the reliability of agentic flows

- Nested chats are now available with a Swarm

FalkorDB Graph RAG

Typically, RAG uses vector databases, which store information as embeddings, mathematical representations of data points. When a query is received, it's also converted into an embedding, and the vector database retrieves the most similar embeddings based on distance metrics.
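The retrieval step described above can be sketched in a few lines of plain Python. This is only an illustration: the three-dimensional vectors and the `store` and `retrieve` names are made up for the example, and a real system would obtain embeddings from an embedding model and use a vector database rather than a dictionary.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings; real ones come from an embedding model.
store = {
    "The Matrix is a 1999 science fiction film.": [0.9, 0.1, 0.0],
    "Keanu Reeves plays Neo.": [0.2, 0.9, 0.1],
    "FalkorDB is a graph database.": [0.0, 0.1, 0.9],
}


def retrieve(query_embedding, store, top_k=1):
    """Return the top_k stored texts ranked by similarity to the query."""
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(query_embedding, store[text]),
        reverse=True,
    )
    return ranked[:top_k]


# Stands in for the embedding of a query such as "Who plays Neo?"
query_embedding = [0.1, 0.8, 0.2]
top_match = retrieve(query_embedding, store)
```

Here `top_match` holds the single stored sentence whose vector points in the direction closest to the query's; each result is an isolated chunk, with no notion of how the chunks relate to one another.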

Graph-based RAG, on the other hand, leverages graph databases, which represent knowledge as a network of interconnected entities and relationships. When a query is received, Graph RAG traverses the graph to find relevant information based on the query's structure and semantics.
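To make the contrast concrete, here is a minimal sketch of graph-style retrieval over (subject, relation, object) triples. The triples and the helper names `subjects_of` and `objects_of` are assumptions for illustration; a graph database such as FalkorDB would answer these questions with graph queries rather than list comprehensions.

```python
# Hypothetical knowledge graph stored as (subject, relation, object) triples.
triples = [
    ("Keanu Reeves", "ACTED_IN", "The Matrix"),
    ("Carrie-Anne Moss", "ACTED_IN", "The Matrix"),
    ("The Matrix", "DIRECTED_BY", "The Wachowskis"),
    ("Keanu Reeves", "ACTED_IN", "John Wick"),
]


def subjects_of(triples, relation, obj):
    """Every subject linked to obj by the given relation."""
    return [s for s, r, o in triples if r == relation and o == obj]


def objects_of(triples, subject, relation):
    """Every object the subject points to through the given relation."""
    return [o for s, r, o in triples if s == subject and r == relation]


# "Who acted in The Matrix?" is a one-hop lookup.
actors = subjects_of(triples, "ACTED_IN", "The Matrix")

# "Which other films feature actors from The Matrix?" needs two hops:
# film -> actors -> their other films.
other_films = sorted(
    {
        film
        for actor in actors
        for film in objects_of(triples, actor, "ACTED_IN")
        if film != "The Matrix"
    }
)
```

The second question is the kind that is awkward for similarity search alone, because the answer depends on following relationships rather than on matching text.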

Advantages of Graph RAG:

- Enhanced Contextual Understanding: Graph RAG captures the relationships between entities in the knowledge graph, providing richer context for LLMs. This enables more accurate and nuanced responses compared to traditional RAG, which often retrieves isolated facts.

- Improved Reasoning Abilities: The interconnected nature of graph databases allows Graph RAG to perform reasoning and inference over the knowledge. This is crucial for tasks requiring complex understanding and logical deductions, such as question answering and knowledge discovery.

- Handling Complex Relationships: Graph RAG excels at representing and leveraging intricate relationships between entities, allowing it to tackle complex queries that involve multiple entities and their connections. This makes it suitable for domains with rich interconnected data, like healthcare or finance.

- Explainable Retrieval: The graph traversal process in Graph RAG provides a clear path for understanding why specific information was retrieved. This transparency is valuable for visualizing, debugging and building trust in the system's outputs.
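The explainability point can be illustrated with a small breadth-first search that returns not just the answer but the chain of hops that led to it. The `graph` dictionary and the `explain_path` function are hypothetical names for this sketch and are not part of AG2 or FalkorDB.

```python
from collections import deque

# Hypothetical directed graph: node -> list of (relation, neighbour) edges.
graph = {
    "Neo": [("PLAYED_BY", "Keanu Reeves"), ("APPEARS_IN", "The Matrix")],
    "The Matrix": [("DIRECTED_BY", "The Wachowskis")],
    "Keanu Reeves": [],
    "The Wachowskis": [],
}


def explain_path(graph, start, goal):
    """Breadth-first search returning the hops from start to goal, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbour in graph.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append((neighbour, path + [(node, relation, neighbour)]))
    return None


path = explain_path(graph, "Neo", "The Wachowskis")
for source, relation, target in path:
    print(f"{source} -[{relation}]-> {target}")
```

Each printed hop is a fact stored in the graph, so the retrieved answer can be traced back to the exact relationships that produced it.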

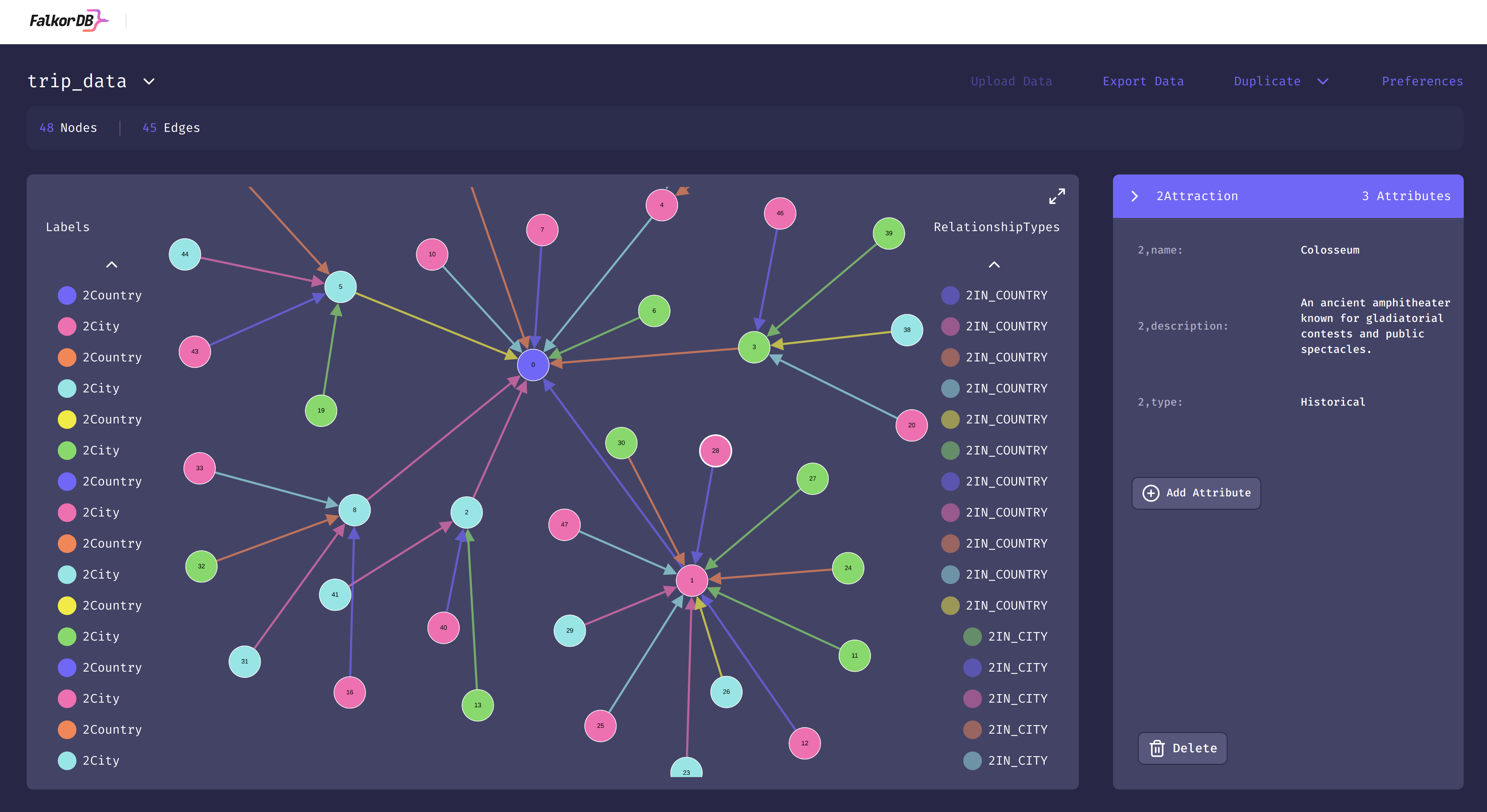

FalkorDB Graph RAG capabilities

FalkorDB is a high-performance graph database that lets LLM responses be grounded in graph queries, reducing hallucinations.

In release 0.5, AG2 adds the ability to give an agent FalkorDB Graph RAG querying capabilities. These agents behave like other agents in an orchestration, but they query FalkorDB and return the results as their response.

An LLM is incorporated into this capability, allowing data to be classified during ingestion, queries to be optimised, and results to be provided back in natural language.

See the FalkorDB docs for how to set up a database.

Below is a simple example of creating a FalkorDB Graph RAG agent in AG2. A data file containing a web page about the movie The Matrix is ingested into the database, and the knowledge graph is created automatically before being queried. Data file here.

import os

import autogen

config_list = autogen.config_list_from_json(env_or_file="OAI_CONFIG_LIST")
os.environ["OPENAI_API_KEY"] = config_list[0]["api_key"]  # Utilised by the FalkorGraphQueryEngine

from autogen import ConversableAgent, UserProxyAgent
from autogen.agentchat.contrib.graph_rag.document import Document, DocumentType
from autogen.agentchat.contrib.graph_rag.falkor_graph_query_engine import FalkorGraphQueryEngine
from autogen.agentchat.contrib.graph_rag.falkor_graph_rag_capability import FalkorGraphRagCapability

# Auto generate graph schema from unstructured data
input_path = "../test/agentchat/contrib/graph_rag/the_matrix.txt"
input_documents = [Document(doctype=DocumentType.TEXT, path_or_url=input_path)]

# Create FalkorGraphQueryEngine
query_engine = FalkorGraphQueryEngine(
    name="The_Matrix_Auto",
    host="172.18.0.3",  # Change to your FalkorDB host if needed
    port=6379,  # Change to your FalkorDB port if needed
)

# Ingest data and initialize the database
query_engine.init_db(input_doc=input_documents)

# Create a ConversableAgent
graph_rag_agent = ConversableAgent(
    name="matrix_agent",
    human_input_mode="NEVER",
)

# Associate the capability with the agent
graph_rag_capability = FalkorGraphRagCapability(query_engine)
graph_rag_capability.add_to_agent(graph_rag_agent)

# Create a user proxy agent to converse with our RAG agent
user_proxy = UserProxyAgent(
    name="user_proxy",
    human_input_mode="ALWAYS",
)

user_proxy.initiate_chat(
    graph_rag_agent,
    message="Name a few actors who've played in 'The Matrix'",
)

Here's the output, showing the FalkorDB Graph RAG agent, matrix_agent, finding relevant actors and then, when asked for more, reporting that the ingested data contains no other actors for the movie.

user_proxy (to matrix_agent):

Name a few actors who've played in 'The Matrix'

--------------------------------------------------------------------------------

matrix_agent (to user_proxy):

Keanu Reeves, Laurence Fishburne, Carrie-Anne Moss, and Hugo Weaving are a few actors who've played in 'The Matrix'.

--------------------------------------------------------------------------------

user_proxy (to matrix_agent):

Who else acted in The Matrix?

--------------------------------------------------------------------------------

matrix_agent (to user_proxy):

Based on the provided information, there is no additional data about other actors who acted in 'The Matrix' outside of Keanu Reeves, Laurence Fishburne, Carrie-Anne Moss, and Hugo Weaving.

--------------------------------------------------------------------------------

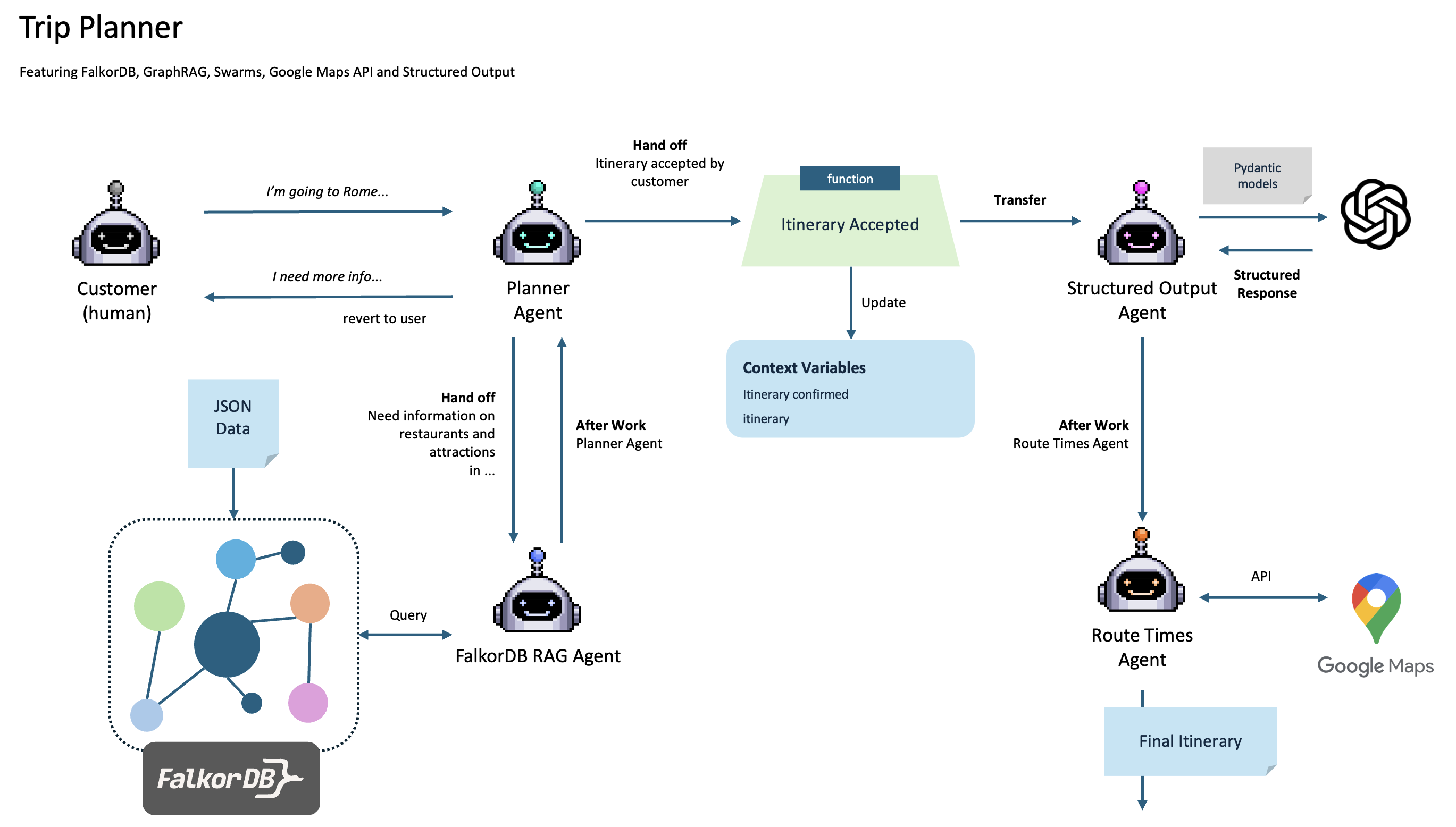

For a more in-depth example, see this notebook where we create this Trip Planner workflow.

Structured Outputs

Also featured in the Trip Planner example above, AG2 now enables your agents to respond with a structured output, aligned with a Pydantic model.

This capability provides strict responses, where the LLM provides the data in a structure that you define. This enables you to interpret and validate information precisely, providing more robustness to an LLM-based workflow.

This is available when using OpenAI LLMs (gpt-3.5-turbo-0613 or gpt-4-0613 and above) and is set in the LLM configuration:

import os

import autogen
from pydantic import BaseModel

# Here is our model
class Step(BaseModel):
    explanation: str
    output: str

class MathReasoning(BaseModel):
    steps: list[Step]
    final_answer: str

# response_format is added to our configuration
llm_config = {
    "config_list": [
        {
            "api_type": "openai",
            "model": "gpt-4o-mini",
            "api_key": os.getenv("OPENAI_API_KEY"),
            "response_format": MathReasoning,
        }
    ]
}

# This agent's responses will now be based on the MathReasoning model
assistant = autogen.AssistantAgent(
    name="Math_solver",
    llm_config=llm_config,
)

A sample response to "how can I solve 8x + 7 = -23" would be:

{
  "steps": [
    {
      "explanation": "To isolate the term with x, we first subtract 7 from both sides of the equation.",
      "output": "8x + 7 - 7 = -23 - 7 -> 8x = -30."
    },
    {
      "explanation": "Now that we have 8x = -30, we divide both sides by 8 to solve for x.",
      "output": "x = -30 / 8 -> x = -3.75."
    }
  ],
  "final_answer": "x = -3.75"
}
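On the consuming side, a reply like the one above can be loaded back into the same Pydantic models, so that downstream code works with typed fields instead of raw text. The following is a minimal sketch: `parse_reply` is a hypothetical helper, not an AG2 function, and the `raw_reply` string is a shortened stand-in for an agent's message content.

```python
import json

from pydantic import BaseModel, ValidationError


class Step(BaseModel):
    explanation: str
    output: str


class MathReasoning(BaseModel):
    steps: list[Step]
    final_answer: str


def parse_reply(raw: str):
    """Return a MathReasoning instance, or None if the reply does not fit the model."""
    try:
        return MathReasoning(**json.loads(raw))
    except (json.JSONDecodeError, TypeError, ValidationError):
        return None


# Shortened stand-in for the content of an agent's structured reply.
raw_reply = json.dumps(
    {
        "steps": [
            {"explanation": "Subtract 7 from both sides.", "output": "8x = -30"},
            {"explanation": "Divide both sides by 8.", "output": "x = -3.75"},
        ],
        "final_answer": "x = -3.75",
    }
)

reply = parse_reply(raw_reply)
if reply is not None:
    print(reply.final_answer, "in", len(reply.steps), "steps")
```

Returning `None` for a malformed reply is just one design choice; a workflow could equally raise the error or ask the agent to retry.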

See the Trip Planner and Structured Output notebooks to start using Structured Outputs.

Nested Chats in Swarms

Building on the capability of Swarms, AG2 now allows you to utilise a nested chat within a swarm. By providing this capability, you can perform sub-tasks or solve more complex tasks, while maintaining a simple swarm setup.

Additionally, a carryover configuration allows you to control what information from the swarm messages is carried over to the nested chat. Options include bringing over context from all messages, the last message, an LLM summary of the messages, or the output of your own custom function.
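The four carryover options can be pictured with a small, self-contained sketch. This is not AG2's implementation or API: `select_carryover`, the `"all"` and `"last_msg"` labels, and `stub_summary` (which stands in for an LLM-generated summary) are names assumed purely for illustration, so check the Swarm documentation for the actual configuration keys.

```python
from typing import Callable, Union


def select_carryover(messages: list, method: Union[str, Callable]) -> str:
    """Pick the text a nested chat would start from (illustrative only)."""
    if callable(method):
        # Custom function, or a summariser such as an LLM call.
        return method(messages)
    if method == "all":
        return "\n".join(m["content"] for m in messages)
    if method == "last_msg":
        return messages[-1]["content"] if messages else ""
    raise ValueError(f"unknown carryover method: {method!r}")


def stub_summary(messages: list) -> str:
    """Stand-in for an LLM summary; a real one would call a model."""
    return f"{len(messages)} messages; latest: {messages[-1]['content']}"


# Hypothetical swarm history.
swarm_messages = [
    {"name": "user", "content": "Plan a weekend in Rome."},
    {"name": "planner", "content": "Day 1: Colosseum. Day 2: Vatican."},
]

last_only = select_carryover(swarm_messages, "last_msg")
everything = select_carryover(swarm_messages, "all")
summarised = select_carryover(swarm_messages, stub_summary)
```

Carrying over only the last message keeps the nested chat's context small, while carrying over everything or a summary trades tokens for more background.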

See the Swarm documentation for more information.

For Further Reading

- Trip Planner Notebook Example Using GraphRag, Structured Output & Swarm

- Documentation about FalkorDB

- OpenAI's Structured Outputs

- Structured Output Notebook Example

Do you have interesting use cases for FalkorDB / RAG? Would you like to see more features or improvements? Please join our Discord server for discussion.